Have you experienced a technical SEO nightmare that stuck with you? Frightened you? Or perhaps caused you to lose a chunk of revenue?

Every SEO seems to have a peculiar horror story: something they witnessed on a client website, or something they did themselves, that still haunts them and that they would rather suppress.

Today we’re challenging SEO professionals, all of whom practice in the southern U.S., to open up and tell the world their answers to this question:

“What is the most revenue-impacting technical SEO issue you’ve seen and how did you overcome it?”

Patrick Coombe, CEO and Founder of Elite Strategies, Delray Beach FL

The lesson(s) to learn from all of this: don’t let amateurs work on your website. Our industry has a very low barrier to entry, and a lot of the time clients end up paying “professionals” who learn on the job and make mistakes on live websites. Also, be very wary of third-party add-ons, especially when you don’t know what they do.

Stephanie Wallace, Director of SEO at NeboWeb, Atlanta GA

Most pages on the site had an incorrect canonical tag pointing to the homepage, as well as a second, incorrectly placed self-referencing canonical tag pointing to the non-trailing-slash version of each page (the non-preferred version).

The team conducted a thorough canonical audit of the website and recommended removing the incorrect homepage canonicals and updating the self-referencing canonicals to point to the preferred trailing-slash URLs.
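Part of a canonical audit like this can be scripted. The sketch below is a minimal illustration, not the team’s actual tooling: it parses one page’s HTML with Python’s standard `html.parser`, collects every `rel="canonical"` link, and flags the problems described here (more than one canonical, a canonical pointing to the homepage, and a non-trailing-slash target). It assumes the trailing-slash URL is the preferred version; the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class CanonicalCollector(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        rels = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rels and attr_map.get("href"):
            self.canonicals.append(attr_map["href"])


def audit_canonicals(page_url, html):
    """Return a list of problems with a page's canonical tags.

    Assumption: trailing-slash URLs are preferred, and only the homepage
    itself should canonicalize to the homepage.
    """
    collector = CanonicalCollector()
    collector.feed(html)
    problems = []

    if not collector.canonicals:
        return ["no canonical tag"]
    if len(collector.canonicals) > 1:
        problems.append(f"{len(collector.canonicals)} canonical tags (expected 1)")

    page_path = urlparse(page_url).path or "/"
    for href in collector.canonicals:
        # Resolve relative hrefs against the page URL before comparing paths.
        target_path = urlparse(urljoin(page_url, href)).path or "/"
        if target_path == "/" and page_path != "/":
            problems.append(f"canonical points to homepage: {href}")
        elif not target_path.endswith("/"):
            problems.append(f"canonical uses non-trailing-slash URL: {href}")
    return problems
```

Run across a crawl of the site, a script like this would surface pages carrying both bad tags at once, the pattern described above.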

Since the recommendations were implemented, organic traffic and revenue have rebounded substantially. Organic traffic has grown more than 40% year over year; organic revenue has increased almost 60% year over year and more than 140% compared to the first month after the site relaunch.

Jeff Beale, Best-selling Author and Marketing Strategist at Mr Marketology, Atlanta GA

To solve this challenge, we added a dynamic title tag that pulled in attributes from their database, and then added a text block with a dynamic title wrapped in an H1. Beneath the dynamic title, we added a paragraph of custom, relevant text on the main category pages. All paginated pages pointed to the first main page with a canonical tag, and prev/next links replaced the numbered sub-page navigation.
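A rough sketch of the markup this setup produces for one page of a paginated category. It assumes a hypothetical `?page=N` URL scheme and uses a list of attribute strings to stand in for the database values, since the real site’s schema isn’t described. It mirrors the approach above: a title built from attributes, an H1 plus custom paragraph, a canonical to page 1 on every paginated page, and `rel="prev"`/`rel="next"` links between neighboring pages.

```python
from html import escape


def page_url(base_url, n):
    """Page 1 is the main category URL; later pages use a hypothetical ?page=N scheme."""
    return base_url if n == 1 else f"{base_url}?page={n}"


def category_head_tags(base_url, category, attributes, page, last_page):
    """Build <head> tags for one page of a paginated category listing.

    `attributes` stands in for values pulled from the product database.
    """
    # Dynamic title: category name followed by its database attributes.
    title = (f"{category} | " + ", ".join(attributes)) if attributes else category
    tags = [
        f"<title>{escape(title)}</title>",
        # Every paginated page canonicalizes to the first (main) page.
        f'<link rel="canonical" href="{escape(page_url(base_url, 1))}">',
    ]
    # prev/next links take the place of numbered sub-page navigation.
    if page > 1:
        tags.append(f'<link rel="prev" href="{escape(page_url(base_url, page - 1))}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{escape(page_url(base_url, page + 1))}">')
    return tags


def category_intro(category, attributes, intro_text):
    """Text block: dynamic H1 title followed by a paragraph of custom copy."""
    heading = f"{category}: {', '.join(attributes)}" if attributes else category
    return f"<h1>{escape(heading)}</h1>\n<p>{escape(intro_text)}</p>"
```

For example, page 2 of 3 gets a canonical to the main category URL, a prev link back to that same URL, and a next link to `?page=3`.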

With these changes, they saw a ranking lift for the home page’s main term, and several of their category pages, and even some of their product pages, moved up to the first page of results.

Adam Thompson, Director of Digital at 10X Digital, Bradenton FL

Oftentimes it’s the simplest issues that cause the biggest problems. The largest revenue drops I’ve seen from technical SEO issues have been caused by blocking Googlebot or failing to redirect URLs.

It’s generally best practice to block Google from crawling a website while it is on the development server, either through robots.txt or robots meta tags. The problem comes when the development team moves the website from the development server to the live server without updating these settings. Google is then blocked from crawling (or indexing) the new website, rankings can crash, and revenue takes a severe blow. The fix is very simple: update the robots.txt file to allow crawling and the robots meta tag to allow indexing.
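A pre-launch check along these lines could catch leftover development settings. This is a minimal sketch, not a complete audit: it feeds a robots.txt body to Python’s standard `urllib.robotparser` to ask whether Googlebot may fetch a path, and scans the homepage HTML for a `noindex` (or `none`) directive in a `robots` or `googlebot` meta tag. Fetching the live files is left out so the example stays self-contained; `launch_blockers` is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsCollector(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        name = (attr_map.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = (attr_map.get("content") or "").lower()
            self.directives.extend(d.strip() for d in content.split(","))


def launch_blockers(robots_txt, homepage_html, path="/"):
    """Return reasons Googlebot would be kept out of a freshly launched site."""
    problems = []

    # Parse the robots.txt text directly instead of fetching it over the network.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch("Googlebot", path):
        problems.append(f"robots.txt disallows Googlebot from {path}")

    meta = MetaRobotsCollector()
    meta.feed(homepage_html)
    if "noindex" in meta.directives or "none" in meta.directives:
        problems.append("meta robots tag blocks indexing")
    return problems
```

An empty result is what you would want to see on the live server; a dev-server configuration carried over to production would trip both checks.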

Another technical SEO issue I’ve seen frequently that can have a big impact on revenue is failing to redirect old URLs that are no longer valid. This most commonly happens when launching a new version of a website: the old URLs are left to 404, and the website loses the link juice, rankings, and revenue it previously had.

I recently worked on a project where the website had lost 100,000+ pageviews (and the associated revenue) every month, largely because proper redirects had not been implemented when the latest version of the site launched. Correcting this issue is usually pretty straightforward: implement 1:1 redirects that 301-redirect each old URL to its equivalent new URL.

It is not sufficient to merely redirect all the old URLs to the homepage. For example, redirect /about.html to /about-us/ not to the homepage. It’s best to implement these redirects when the new website version is launched or as quickly thereafter as possible. However, I’ve seen positive results from implementing redirects several months later. So if you lost rankings and revenue due to a site update that happened a while back, there is still hope.
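One way to keep a 1:1 redirect map honest is to generate the server rules from it and refuse entries that fall back to the homepage. The sketch below assumes an nginx server (the original doesn’t name one) and emits exact-match `location =` blocks with `return 301`; the map format and function name are illustrative assumptions.

```python
from urllib.parse import urlparse


def build_redirect_rules(redirect_map):
    """Turn a 1:1 {old_path: new_url} map into nginx exact-match 301 rules.

    Rejects entries that send an old page to the homepage instead of its
    equivalent page, and entries that redirect a URL to itself.
    """
    rules = []
    for old_path, new_url in sorted(redirect_map.items()):
        new_path = urlparse(new_url).path or "/"
        if new_path == "/" and old_path != "/":
            raise ValueError(f"{old_path} redirects to the homepage; map it to its equivalent page")
        if new_url == old_path:
            raise ValueError(f"{old_path} redirects to itself")
        # "location =" matches only this exact path, so each old URL gets its own rule.
        rules.append(f"location = {old_path} {{ return 301 {new_url}; }}")
    return rules
```

With the example from this section, `{"/about.html": "/about-us/"}` produces a single rule sending `/about.html` to `/about-us/`, while mapping it to `/` raises an error instead of silently redirecting to the homepage.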

Emory Rowland, Founder at Leverable SEO, Atlanta GA

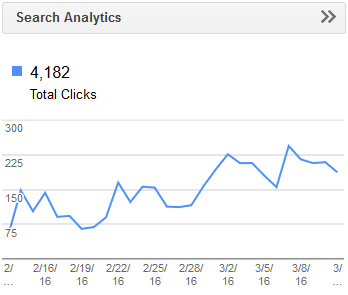

I got a chance to see for myself when I was auditing an ecommerce site for an industrial parts company. Revenue had suddenly dropped about one year previously. But why?

One URL told the story in Google Analytics. It had been a top product page, producing thousands in sales. Then, one day, for whatever reason, the developer changed the URL. It was never redirected to the new location.

What happened?

Naturally, the visits and revenue dried up from that old URL. There was no reason for search engines to keep sending visitors to a dead page. That’s when it hit me just how much is at stake with redirects. Technical SEO really does preserve revenue.

How did I overcome this?

Sadly, it was too late to do anything about the old unredirected URL. It had been returning a 404 error for over a year and had been deindexed. We’d have to rebuild the page and start over. The only good that came out of this experience was demonstrating to the client how important it is to handle redirects going forward.
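Catching this early matters because a page left as a 404 long enough can drop out of the index, as happened here. A hedged sketch of a periodic check: given past revenue per URL (for example, exported from an analytics report) and the HTTP status each URL returns today (from a crawl), list the formerly revenue-producing URLs that now return 404 or 410, largest first. Both input formats are assumptions.

```python
def unredirected_revenue_urls(historical_revenue, current_status):
    """List formerly revenue-producing URLs that now return 404 or 410.

    historical_revenue: {url: revenue}, e.g. exported from an analytics report.
    current_status: {url: HTTP status code} from a crawl of those same URLs.
    Returns (url, revenue) pairs sorted by revenue, largest first.
    """
    lost = [
        (url, revenue)
        for url, revenue in historical_revenue.items()
        if current_status.get(url) in (404, 410)
    ]
    return sorted(lost, key=lambda pair: pair[1], reverse=True)
```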

A.J. Martin, Owner and Consultant at AtlantaSEOExpert.com

Solution: Move the site to a completely new CMS, add canonical tags to each article, and implement a massive 301 redirect campaign. Site performance improved, and articles began to rank very well on their primary URLs, increasing overall organic traffic.

Tom Shivers, Owner of Capture Commerce, Atlanta GA

In my evaluation, I noticed a growing number of “not found” errors, and the site was no longer indexed in Bing.

The robots.txt file was loaded by a security plugin they had installed, but that wasn’t the main problem.

I noticed that most of the “not found” errors were coming from the same directories. In one directory, the pages had tabs containing lists of related links, all pointing to other websites, and none were nofollowed. They were heartily endorsing every website they linked to, yet it was free to be listed there, and there were lots of broken links. Hmm, a potential link farm, even though that was not the organization’s intent.
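Links like these can be found by scanning each page’s HTML. This minimal sketch, using only Python’s standard library, lists links on a page that point to another host and carry no `rel="nofollow"`. It does not check whether the targets are broken, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ExternalLinkAuditor(HTMLParser):
    """Finds <a> links to other hosts that are not marked rel="nofollow"."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlparse(page_url).hostname
        self.followed_external = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        # Resolve relative links, then skip same-host and non-web (mailto:, tel:) links.
        target = urlparse(urljoin(self.page_url, href))
        if target.scheme not in ("http", "https") or target.hostname == self.page_host:
            return
        rels = (attr_map.get("rel") or "").lower().split()
        if "nofollow" not in rels:
            self.followed_external.append(target.geturl())


def followed_external_links(page_url, html):
    """Return external links on the page that pass link equity (no nofollow)."""
    auditor = ExternalLinkAuditor(page_url)
    auditor.feed(html)
    return auditor.followed_external
```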

In another directory was a calendar with prev and next links to preceding and succeeding calendar pages, and as I clicked through, I found I could go far out into the future to blank calendar pages. Hmm, an endless page generator.
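The exact fix isn’t described here. One common way to close this kind of crawl trap, sketched under assumptions, is to render prev/next calendar links only within a bounded range, for instance between the months holding the earliest and latest events. The URL pattern and the way the range is chosen are hypothetical; all dates are expected as the first day of a month.

```python
from datetime import date


def shift_month(month_start, delta):
    """Return the first day of the month `delta` months away from month_start."""
    index = month_start.year * 12 + (month_start.month - 1) + delta
    return date(index // 12, index % 12 + 1, 1)


def calendar_nav(current, first_month, last_month, url_pattern="/calendar/{:%Y-%m}/"):
    """Return prev/next calendar links, omitting any outside [first_month, last_month].

    Assumption: first_month/last_month come from the earliest and latest
    events, so crawlers can never page into empty future months.
    """
    links = {}
    prev_month = shift_month(current, -1)
    next_month = shift_month(current, 1)
    if prev_month >= first_month:
        links["prev"] = url_pattern.format(prev_month)
    if next_month <= last_month:
        links["next"] = url_pattern.format(next_month)
    return links
```

On the last month with events, only a prev link is rendered, so there is no path into blank future pages for a crawler to follow.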

The result: fewer errors, and within a couple of weeks, Bing had indexed a few thousand pages of the site and Google was sending more traffic.

If your site causes Google or Bing to waste crawl budget, the search engines may not crawl all of your content or may be slow to index changes.

What’s your technical SEO nightmare?